I attended the Apache Iceberg Summit 2025 in the US in the past two days. The conference was held at the Hyatt Regency Hotel next to Union Square in downtown San Francisco. This time, two floors of the hotel were rented as the venue. Mainstream companies in the Data field are basically sponsors of the conference, including AWS, Databricks, Snowflake, Cloudera, Dremio, Microsoft, Airbyte, CelerData, Confluent, eltloop, Google, IMB, Minio, Qlik, Redpanda, Starburst, StreamNative, e6data, daft, PuppyGraph, RisingWave, Telmai, wherobots. Sponsorship is a booth that the company exchanged for thoudsands of dollars, and had the opportunity to promote and showcase its products to the audience. According to the known information, I speculate that the sponsorship fee is pretty rich. Even so, there are still many companies that want to spend money but have not been able to grab a spot. The target audience for on-site meetings is 500 people, and the target audience for online meetings is 4000 people. I don’t have overall data, so it’s hard to say how many people are on-site. Sensationally, there are so many people that we have to speak loudly to be heard, and my positions need to be adjusted at any time because I need to make way for others. It can only be said that after Iceberg became the de facto standard in Global Market and Tabular was aggressively acquired by Databricks last year, its popularity has reached a new peak this year.

I spent most of my time chatting with people at the venue, so I neglected to listen to the live session sharing. Fortunately, the session can be watched offline, but now it can only be viewed on the Bizzabo APP. I think the conference organizers will organize it on YouTube later, and the time is to be determined. Below, I will try to share some of my one-sided views based on various inputs, mainly including the outlook of Iceberg v3, AI + DataLake, Streaming + Datalake, HTAP + Datalake, and others. These topics cannot fully represent the main line and overall situation of Iceberg Summit 2025, but only some of my personal understandings.

Iceberg v3 and Beyond

Ryan Blue, as the brightest star in the audience, talked about the core changes of Iceberg v3 in the future.

First, let me add a bit about Ryan’s story. I think he is a person who is extremely focused on technology. It can be said that from 2016 to now, his focus has always been on building cloud-native data lakes. During his 5 years at Netflix, he tried to move the Hive data warehouse to AWS S3. Because S3 did not have atomic rename semantics, Ryan designed and implemented the first S3 job committer [1], which essentially used the Hadoop job committer protocol and S3 MPU interface to replace Hive’s dependence on rename. This solution solves the key issues of Netflix Hive migrating to the cloud, but it is not perfect. For example, the cost of adapting the computing engine is high (different engines need to be modified one by one to adapt to Job Comitter, and Apache Hive Community Edition has not successfully completed Committer adaptation until now). Hive lacks a transaction mechanism, which amplifies incorrect semantics on s3, Hive cannot perform efficient data updates, and Hive’s metadata mechanism causes slow large queries. To solve these issues, Ryan and Danniel implemented a more perfect solution, which is Netflix Iceberg, donated as Apache Iceberg in 2017. The most successful aspect of this project is that all mainstream computing engines in the industry are integrated with Apache Iceberg, bringing users correct and unified data semantics. As we all know, Iceberg eventually became the de facto standard in the lakehouse field. Ryan and Daniel became atypical cases of The Perfectionist Software Engineers achieving financial freedom due to the acquisition of Tabular.

First, Ryan reviewed the summary of the past year since the Iceberg Summit 2024.

The community has released a total of 16 releases, added 250 contributors, introduced 7 new Committers and 5 new PMC members. As far as I know, it is a challenging task to become Iceberg’s Committers and PMCs, with high requirements for understanding the historical context, design rationality, engineering rigor, and cross-organizational & projects collaboration. In the past few years, as the community’s popularity has increased and the number of contributors has expanded, the number of contributors, Committers, and PMCs has increased to varying degrees. This year, the number of contributors has doubled, with new Committers and new PMC members reaching 7 and 5, which should be the best data I have observed in recent years.

In terms of community achievements in the past year, Iceberg Java removed the integration of Hive and Pig, because Apache Hive repository has built-in Iceberg integration, and Pig is no longer used. The support of Kafka connect provides a lightweight and simple solution for entering the lake without relying on flink; with the popularity of AI, the contribution and popularity of PyIceberg are also increasing. In terms of feature richness, Pyiceberg should be second only to Iceberg Java, because the demand weight from AI ecosystems such as Pytorch, Ray, and Daft is indeed very high, after all, any Data Infra project needs to seize the trend of AI. Iceberg-rust has been adopted and contributed by many infra companies in the Rust ecosystem, such as Apple, Databend, Risingwave, and Lakekeeper. Rust was first initiated by RisingWave, and contributions from Nvidia, RisingWave, and Databend are very intensive. Apple is one of the few companies in the Spark Native solution that chooses DataFusion instead of Gluten + Velox solution, and is also one of the companies with the most intensive contributions and internal applications in the Iceberg community. There may be more investment in this area. The Iceberg-cpp project was launched by SingData in China last year. As far as I know, many commercial companies have chosen to implement internal rather than open source C++ versions to varying degrees, considering the higher priority of commercial products & marketing, which is reasonable. But I want to give a strong thumbs up to SingData, hoping that the future open-source iceberg-cpp will reduce the access threshold of the cpp ecosystem and help more commercial products achieve more extreme iceberg performance.

Later, Ryan introduced the core changes of Iceberg v3 in turn.

Geo Type

Iceberg v3 will introduce Geo type to express spatial or geographic information, typical scenarios such as GPS coordinates, planned paths, regional divisions, etc. Initially proposed by Apple and Wherobots in the Iceberg community, Parquet and GeoParquet joined together to jointly determine the final solution. The usage in PoC (not necessarily representing the final solution) is as follows:

CREATE TABLE iceberg.geom_table(

id int,

geom geometry

) USING ICEBERG PARTITIONED BY (xz2(geom, 7));

INSERT INTO iceberg.geom_table VALUES

(1, 'POINT(1 2)'),

(2, 'LINESTRING(1 2, 3 4)'),

(3, 'POLYGON((0 0, 0 1, 1 1, 1 0, 0 0))');

For more context, please refer to the mailing list [2] and design document [3]. This feature will make Iceberg more widely used in spatial and geographical scenarios. I talked to Bill Zhang from Cloudera, and he said that Geo Type is almost a rigid demand feature for many customers in the North American market.

Variant Data Type

Variant Data Type is mainly used to solve the problem of Iceberg’s efficient access to JSON data. Similar to Geo Type, it can be regarded as a key milestone for Apache Iceberg to expand from Structured Data to Semi-Structured Data scenarios.

Snowflake’s customers have a great demand for semi-structured Data Analysis, and Apache Iceberg has become a key part of Snowflake’s open lakehouse. Therefore, the Snowflake team proposed the variant type, which is also a common requirement of many Data Warehouse & Lakehouse vendors. Databricks (Delta essentially relies on this function), Google, Snowflake, and SingData are jointly promoting this work.

Full Table Encryption

Simply put, it means encrypting all data hosted by Iceberg on s3 (including DataFile, Snapshots, Manifests, JSON) with whole-link data. This way, even if the files on s3 are leaked, user data will not be cracked, maximizing the protection of user privacy and security.

I would like to add that there is a classic application scenario in the AI era. The model service provider may store dialogue information in the cloud for model distillation. Dialogue information is usually extremely sensitive private data, so the Iceberg solution that provides end-to-end encryption would be one of the best choices. Of course, Iceberg is still under development, and I have seen direct implementation of parquet extension to achieve encryption, but there are still many problems. I believe that Full Table Encryption will make Iceberg one of the core solutions in the field of cloud data security and analysis.

Better Positional Deletes.

Ryan thinks there are some shortcomings in the positional delete of iceberg v2 in his speech, for example:

- A large number of writes will cause a large number of deleted files, and the background compaction will not be cleaned up in time, resulting in a unstable decrease in read performance.

- One delete File contains positional deletes for multiple data files, which is not efficient for read performance when joining deletes and data files.

- Deleting files in parquet format is not efficient.

The future solution for v3 is:

- Implement synchronous rather than asynchronous compaction on the write side, which can always control the stability of Read Performance.

- Write the Deletion Vector corresponding to Delete files into the Puffin file format designed by Iceberg specifically for Blob values. This not only avoids deleting a large number of small files, but also avoids converting delete files between parquets.

Row Lineage

We know that Iceberg data is essentially composed of transaction write operations, which can be Append operations, Delete operations, Replace operations, etc. However, the core problem is that the Iceberg v1 & v2 format cannot efficiently restore the data involved in each transaction to Change Log events such as INSERT and DELETE. As a result, the Iceberg format cannot perform incremental synchronization or consumption based on Change Log, nor can it perform Materialized View based on incremental Change Log.

Row Lineage essentially restores the change log events of each transaction by adjusting Iceberg’s specifications, so that Iceberg can also have the ability of incremental computation and consumption. This helps Iceberg to easily solve problems in scenarios such as incremental data replication, incremental refresh of materialized views, and incremental calculation.

Russell from Snowflake led this effort, and the core idea was to track an implicit _row_id and _last_updated_seqnum for each row. These two IDs do not change the original parquet file content, but track each DataFile at the manifest level, so that each row of data in each DataFile can deduce an implicit and unique _row_id . With this unique _row_id, we can track which rows are INSERT or DELETE in a transaction. This is the general idea, for more details, please refer to the design document [4]. The current plan has been voted through, and time is still needed to complete the code design and release. Overall, I think the plan is simple and elegant because the entire plan does not modify any content of the parquet/orc file, and only completes the function through the manifest. It can be said that “always keep the original parquet file content” is the Iceberg design principle, and this simple plan follows this principle.

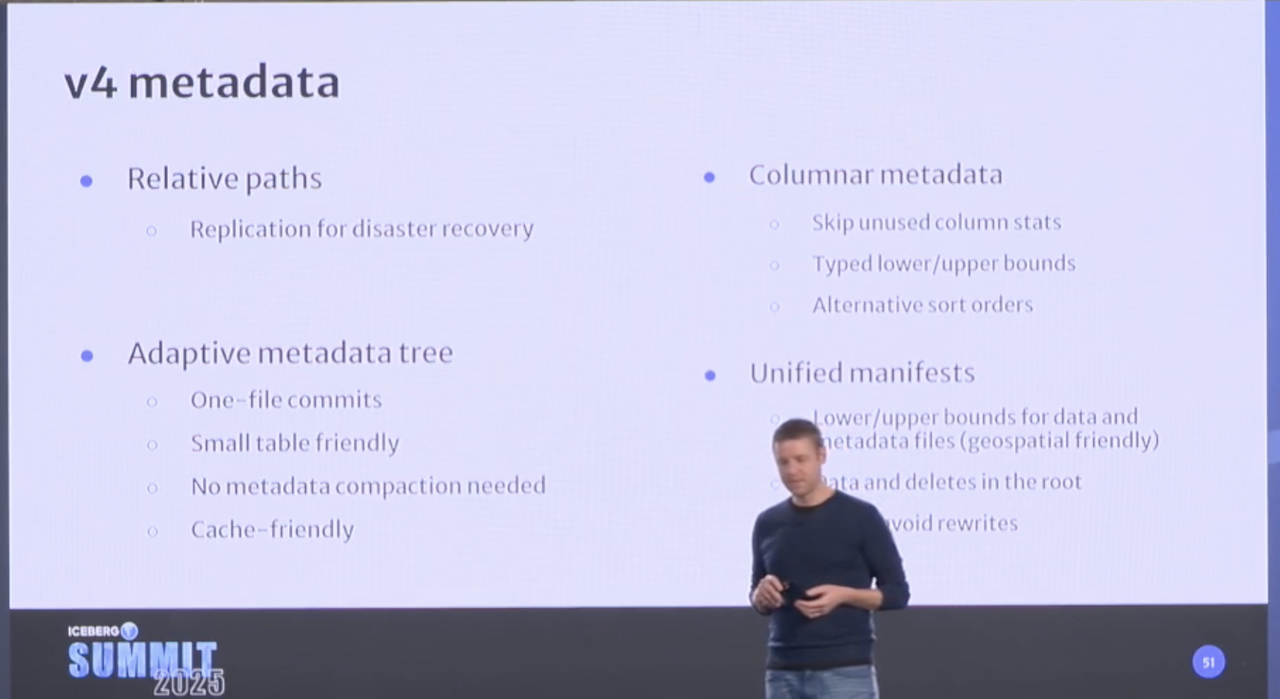

Evolution of Metadata

At the meeting, Ryan briefly explained the planning of Iceberg metadata. The core issues and solutions are:

- For some large blob values, column stats and pruning are unnecessary, so there needs to be an option to skip column stats.

- Iceberg’s classic multi-layer metadata routing mechanism is not friendly to small tables (such as dimission tables). For example, frequent writes to write paths can cause small tables to generate a large number of small files. Reading paths may also affect reading efficiency due to a large number of meta small files. Therefore, in the new metadata design, an adaptive metadata tree will be designed, and the default small table only needs to write and read meta once. Interestingly, the inputs in this scenario come from the real scenario of databricks.

- Actually, I didn’t understand the Unified manifests part :-(

Works with other table formats have pushed iceberg to be better.

Ryan explained the impact and direction of other Table Formats on Iceberg in this section. I believe that “other Table Formats” here include Delta, Snowflake’s internal Formats, and even any other Formats better than Iceberg. Only by “complementing each other’s strengths and weaknesses” can Iceberg have greater leadership. For example, the improvement of Deletion Vector was first inspired by Delta, the single-file commit of small tables to reduce txn latency also came from the real scenarios of Delta, Variant/Geo Data Type came from Snowflake’s real scenarios and was promoted by it, and Row Lineage was designed by Snowflake. As for the direction of Iceberg and Delta, I heard that they will blend and connect with each other. For example, Iceberg’s metadata can serve as a key infrastructure for delta metadata; delta can easily export checkpoints as apache iceberg tables, making delta seamless to reuse Iceberg’s open source ecosystem.

Summary

Due to the Iceberg v2 and Beyond talk, which is a crucial talk that determines the future direction of Iceberg, I spent some space interpreting it. At this conference, I had some very interesting discussions with many people from different companies, including AI + DataLake, Streaming + DataLake, HTAP + DataLake, and other topics. Due to space limitations, I think it might be better to write another article to talk about it. Stay tuned!